We’re often asked: “How do you measure what has been achieved through your training?” This is an important question when deciding whether to invest in training. Evaluation is a fascinating topic in leadership and team development training, because meaningful answers are often anything but easy!

Does learner satisfaction = development?

My first experience of development training was as a participant on a Personal Effectiveness course when I was a pharmaceutical development scientist. The course was in a luxurious estate, the tutor had vast experience and a wide range of anecdotes from politicians, CEOs and celebrity clients. He held our attention with intriguing theories and philosophies. We loved soaking in his deep knowledge and storytelling showmanship.

At the end of the three-day course, all those I spoke with had given full marks on the training evaluation – trainer knowledge, quality of materials, facilities, catering – all 10 out of 10.

However, a couple of weeks later my line manager asked me about the training:

“What did you learn? What have you changed about the way you do your work?”

I was embarrassed to admit that while I could remember some of the many models taught, I hadn’t made any significant changes to my work. The theories were fascinating, but it had been hard to connect them to the actual work I did. On Monday morning back in the lab, nothing changed. I wasn’t alone. When I spoke to colleagues, this seemed to be the case for everyone.

How could it be that a prestigious training course rated as 10 out of 10 achieved almost no value in the workplace?

What is training attempting to achieve?

Often evaluation falls at the first hurdle, because it is simply recording what is easy to measure, rather than what really matters. It is good to learn what participants thought of the catering, but not nearly as valuable as understanding how their performance was affected by the experience and the impact this has for the organisation.

Kirkpatrick identified a hierarchical chain of training outcomes. To design training evaluation, one starts from the top of the chain (results) and works down, answering each question as specifically as possible:

- What results do we want from this training? (change in individual, team, or organisational results)

- What behaviours do we need learners to develop to achieve these results? (competency, mastery)

- What learning is needed as a foundation for these behaviours? (knowledge, understanding, mindset, mental models)

- What reaction is needed for this learning to take place effectively? (engagement level in practice activities, relevance to work, enjoyment, level of challenge)

Training evaluation - what to measure and how?

Having answered these questions, the next step in evaluation design is to decide what will be measured at each level and how this will be done.

The designer will also consider how to measure baseline levels (pre-training) as well as output levels (post-training).
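One way to keep these design decisions in one place is to record them as a simple plan, one entry per level. The Python sketch below is only an illustration: the `LevelPlan` structure, its field names and the sample entries are assumptions made for this example, not a prescribed template.

```python
from dataclasses import dataclass


@dataclass
class LevelPlan:
    """One row of a hypothetical evaluation plan (all fields are illustrative)."""
    level: int            # 1 = reaction, 2 = learning, 3 = behaviour, 4 = results
    name: str
    measure: str          # what will be measured
    baseline_method: str  # how the pre-training level is captured
    post_method: str      # how the post-training level is captured


# Design order: start from the results wanted and work down to reaction.
plan = [
    LevelPlan(4, "Results", "Number of customer complaints",
              "Existing KPI data before training", "Same KPI data after training"),
    LevelPlan(3, "Behaviour", "Observed use of course techniques",
              "360-degree feedback before training", "360-degree feedback after training"),
    LevelPlan(2, "Learning", "Knowledge and understanding of the models taught",
              "Pre-course assessment", "Post-course assessment"),
    LevelPlan(1, "Reaction", "Engagement and relevance to work",
              "Not applicable", "End-of-course survey"),
]

# Measurement is usually easiest at level 1, so list the plan in that order too.
measurement_order = sorted(plan, key=lambda row: row.level)

for row in measurement_order:
    print(f"Level {row.level} ({row.name}): {row.measure}")
```

Writing the plan in design order and then sorting it for measurement keeps the link between each level's question and the data that will answer it.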

Generally speaking, level 1 (reaction) is the easiest to measure, and difficulty increases up to level 4 (results). We have outlined some of the practical approaches we use below:

1. REACTION TO TRAINING

Learner reaction is evaluated through trainer observation during the course and end-of-course surveys. We know from research on mastery that effective learning requires expert input, engagement in relevant skills practice, the right level of challenge, enjoyment and motivation, and timely feedback. Our surveys therefore ask learners how well the training delivered each of these elements.

We conduct this as standard on every training course and it is our minimum level of evaluation.

Training evaluation tools:

- Trainer observation sheets

- Temperature check activities

- Online / paper-based course evaluation

2. LEARNING

This focuses on what participants have and haven’t learned. Each training course is built around specific learning objectives. For example:

- The learner will be able to explain a leadership style model and apply it to select an appropriate style for a given situation

- Or, the learner will apply a growth mindset when considering the development potential of team members

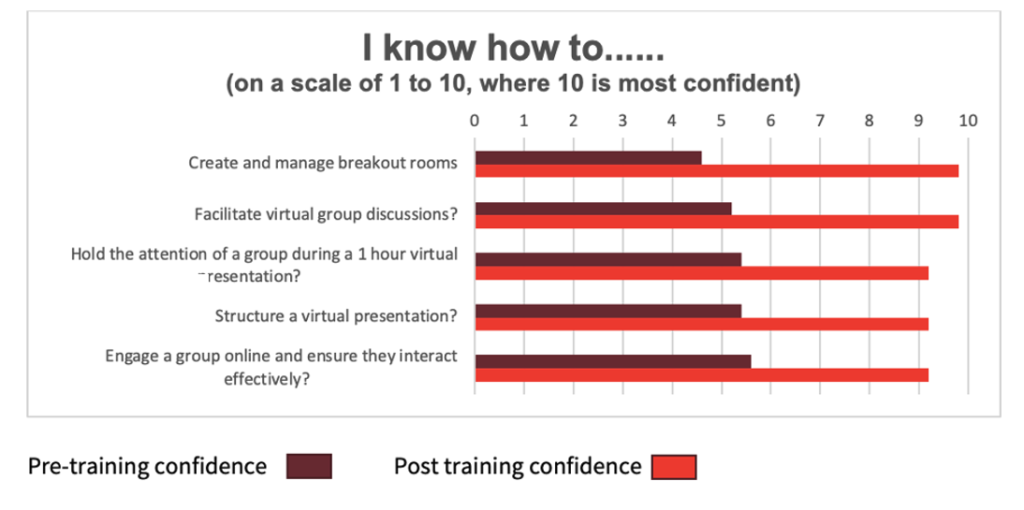

Level 2 evaluation compares learners’ knowledge, understanding and mindsets before and after training to establish the learning which has occurred.

Training evaluation tools:

- Pre- and post-course learner assessment and comparison of scores

- Learner questionnaire asking for their evaluation of what they know (not always appropriate – for example for those very new to topics)

- 1:1 conversations pre and post training

Below is an example of learner self-assessment results showing the improvement in confidence of a group of learners. Scores are averaged across the group.
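As a rough illustration of how such group averages might be calculated, the Python sketch below compares pre- and post-course self-assessment scores. The topics, the 1 to 10 confidence scale and all of the scores are invented for this example and are not real course results; `average_change` is a hypothetical helper name.

```python
from statistics import mean

# Hypothetical self-assessment scores (1-10 confidence scale), one entry per learner.
# These numbers are invented purely for illustration.
pre_scores = {
    "Explaining leadership styles": [3, 4, 2, 5],
    "Choosing a style for a situation": [2, 3, 3, 4],
}
post_scores = {
    "Explaining leadership styles": [7, 8, 6, 7],
    "Choosing a style for a situation": [6, 7, 5, 6],
}


def average_change(pre, post):
    """Return {topic: (pre average, post average, change)} across the group."""
    summary = {}
    for topic, before in pre.items():
        pre_avg = mean(before)
        post_avg = mean(post[topic])
        summary[topic] = (pre_avg, post_avg, post_avg - pre_avg)
    return summary


summary = average_change(pre_scores, post_scores)
for topic, (pre_avg, post_avg, change) in summary.items():
    print(f"{topic}: {pre_avg:.1f} -> {post_avg:.1f} ({change:+.1f})")
```

Averaging across the group hides individual variation, so it can be worth looking at each learner's change as well as the group figure.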

3. BEHAVIOUR

While knowledge and understanding are important, it is vital that newfound techniques are put into practice and shape behaviour. The third level of assessment measures what learners do differently because of training.

Training evaluation tools:

- 360-degree feedback on learners’ behaviour (performance) before and after training

- Trainer observation of learners during on-course exercises, or in workplace situations

- Learner surveys asking questions such as “What have you put to use from the training course?” and “Where are you aware of changing behaviour?”

- Line manager assessment of performance pre and post training

Example: Following a Virtual Presentation Skills course, learners individually made a 3-minute presentation to the trainer. The trainer assessed which of the course tools and techniques they had incorporated in their presentation and how this compared to their initial presentation at the start of training.
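A comparison like this can be recorded as a simple checklist. The Python sketch below is one possible way to do it, assuming a hypothetical list of course techniques and a hypothetical helper `technique_uptake`. It shows which techniques a learner newly adopted between the initial and final presentation.

```python
# Hypothetical checklist of techniques taught on the course (illustrative only).
COURSE_TECHNIQUES = frozenset({
    "clear opening",
    "eye contact with camera",
    "vocal variety",
    "structured close",
})


def technique_uptake(initial, final, taught=COURSE_TECHNIQUES):
    """Compare techniques observed in the initial and final presentations."""
    initial_used = set(initial) & taught
    final_used = set(final) & taught
    return {
        "adopted": sorted(final_used - initial_used),        # new since the course
        "already_used": sorted(final_used & initial_used),   # used before and after
        "not_yet_used": sorted(taught - final_used),         # taught but not observed
    }


# Example observations for one learner (invented for illustration).
result = technique_uptake(
    initial={"clear opening"},
    final={"clear opening", "vocal variety", "structured close"},
)
for category, techniques in result.items():
    print(f"{category}: {', '.join(techniques) or 'none'}")
```

The "not yet used" list gives the trainer and line manager a concrete starting point for follow-up coaching.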

4. RESULTS OF TRAINING

The training investment is made with an expectation of positive outcomes for the organisation and/or individual being trained. Depending on the nature of learning outcomes, it can be possible to measure the results which are directly attributable to training.

Level 4 evaluation measures the organisational and individual results of the training. For example:

- Rate of employee turnover

- Speed of bid completion

- Number of customer complaints

- Number or value of sales deals closed per salesperson

- Level of morale

- Production output

This evaluation requires careful design of measurables and a solid plan to collect data before and after training. The plan will need to consider other variables which can affect performance and attempt to estimate how much of the benefit was directly due to the learning intervention.

Training evaluation tools:

- Data collection within the organisation, preferably using existing KPIs

- Data analysis

- Return on Investment (ROI) calculations

- Interviews with leaders to establish subjective Return on Expectation (ROE)

This is the most expensive and time-consuming level of evaluation but gives the most meaningful measure of training outcomes.
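For the ROI calculation, a commonly used form is net benefit divided by cost, expressed as a percentage. The Python sketch below is a minimal illustration under stated assumptions: the function name `training_roi`, the `attribution` parameter (the estimated share of the benefit that is due to the training rather than other variables) and the figures are all hypothetical.

```python
def training_roi(benefit, cost, attribution=1.0):
    """Return ROI as a percentage: (attributed benefit - cost) / cost * 100.

    benefit     -- monetary value of the measured improvement
    cost        -- total cost of the training
    attribution -- assumed share of the benefit due to training (0 to 1)
    """
    if cost <= 0:
        raise ValueError("cost must be greater than zero")
    if not 0 <= attribution <= 1:
        raise ValueError("attribution must be between 0 and 1")
    attributed_benefit = benefit * attribution
    return (attributed_benefit - cost) / cost * 100


# Invented figures: 30,000 of measured benefit, half attributed to training,
# against a training cost of 10,000.
roi = training_roi(benefit=30_000, cost=10_000, attribution=0.5)
print(f"ROI: {roi:.0f}%")
```

The attribution share is usually the hardest input to justify, so it helps to state how it was estimated alongside the final figure.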